AOMedia Research Workshop Europe 2023

AOMedia Research Workshop Europe 2023 (Monday, June 19)

Welcome to the AOMedia Research Workshop Europe 2023, which will be held in conjunction with QoMEX 2023 in the historic city of Ghent, Belgium this June. The symposium is organized by the Alliance for Open Media (AOMedia), an organization dedicated to the development of open, royalty-free technologies for media compression and delivery over the web.

The AOMedia Research Workshop aims to provide a forum for AOMedia member companies and leading academics to share their latest research results and discuss new ideas on media codecs and media processing, and industry applications of these technologies.. The goal is to foster collaboration between AOMedia member companies and the global academic community, with the goal of driving future media standards and formats.

This year marks the first time that the AOMedia Research Workshop comes to Europe. Collocated with QoMEX 2023, this year’s workshop aims to encourage the close interaction between media technology experts and quality of experience experts, bringing new perspectives to each other’s communities.

Confirmed attending member companies include Amazon, Ateme, Google, Meta, Netflix and Visionular.

Organizing Committee

Anne Aaron (Netflix, USA)

Andrey Norkin (Netflix, USA)

Zhi Li (Netflix, USA)

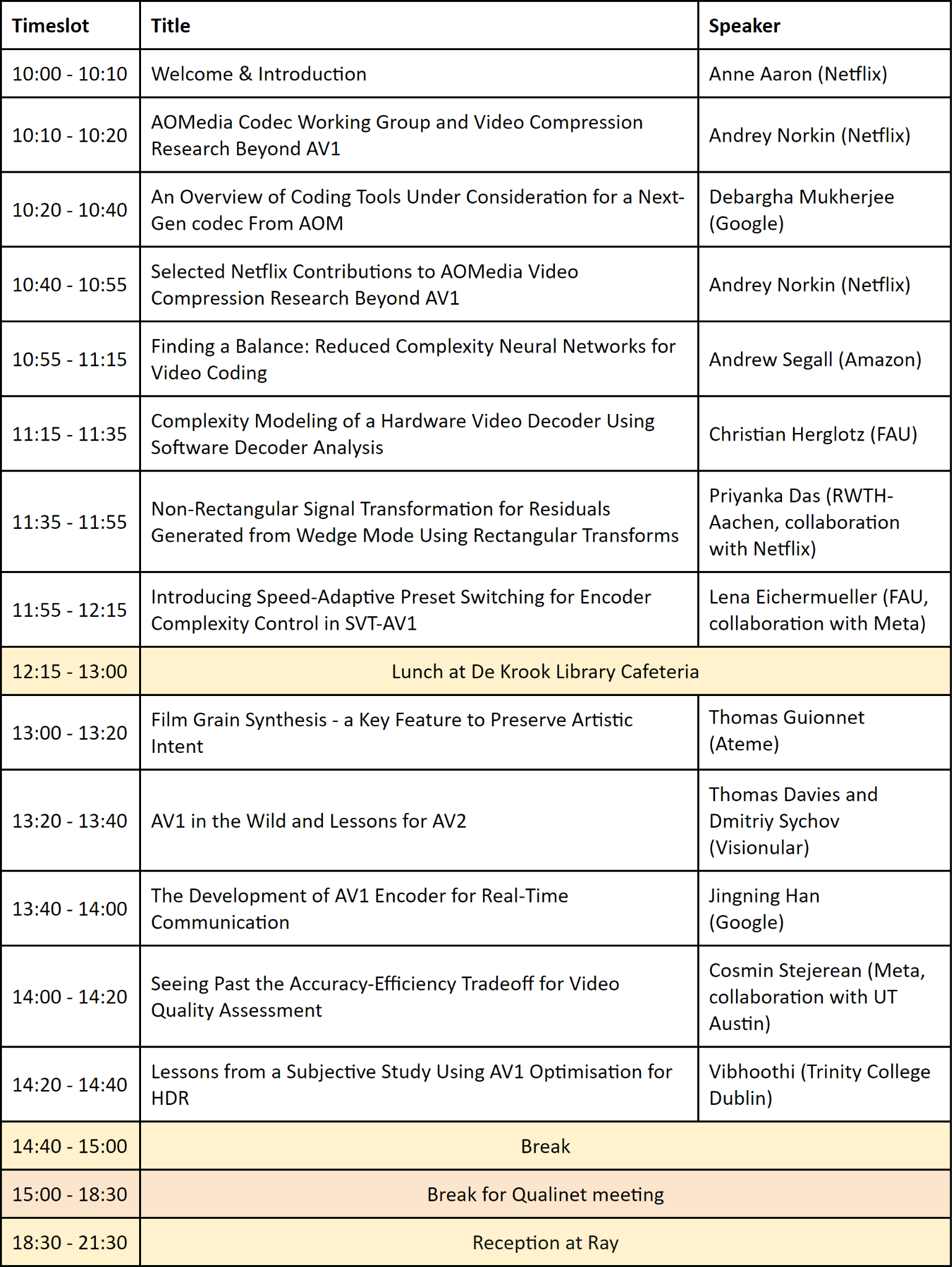

Workshop schedule

10:00 - 10:20 Welcome & introduction

10:20 - 12:15 Presentations

12:15 - 13:00 Lunch at De Krook Library Cafeteria

13:00 - 14:40 Presentations

14:40 - 15:00 Break

(15:00 - 18:30) (Break for Qualinet meeting)

17:30 - 19:00 Walking Guided tour

19:00 - 22:00 Reception at Ray

Technical program

Presentation abstracts

An Overview of Coding Tools Under Consideration for a Next-Gen codec From AOM

Alliance for Open Media (AOM) is currently developing a next-generation video codec beyond AV1. The new codec aims to achieve 40% compression gain over its predecessor AV1. This talk will provide a brief overview of the most innovative tools under consideration, present coding results on the latest Anchor, and discuss ongoing activities and future plans.

Selected Netflix Contributions to AOMedia Video Compression Research Beyond AV1

Netflix is a founding member of AOMedia and actively participated in the development of the AV1 video codec. Recently, the Netflix video codec team has been contributing to the AOMedia research efforts beyond AV1. This talk will focus on selected Netflix team contributions to the research beyond AV1, such as deblocking filtering and intra prediction.

Finding a Balance: Reduced Complexity Neural Networks for Video Coding

Integrating neural networks into future video coding standards has been shown to provide significant improvements in coding efficiency. Unfortunately, the computational complexity associated with the neural network, specifically the number of multiply-accumulate (MAC) operations, makes many of these approaches intractable in practice. In this talk, we focus on using neural networks as an in-loop filter and provide a brief summary of recent work. We then introduce our efforts to reduce network complexity while preserving coding efficiency. Ablation studies are shown in the context of the developing AV2 standard.

Complexity Modeling of a Hardware Video Decoder Using Software Decoder Analysis

During the standardization of a video codec, the area and power costs of a hardware implementation are unknown. Consequently, hardware encoder and decoder implementations have area and power costs that are unpredictable. To overcome this problem, we show multiple strategies to estimate the hardware-decoder complexity by using software decoders. By doing so, we analyze and measure the energy demand of several software decoders and propose new energy models. Finally, we are able to predict the energy demand of a hardware decoder with a precision of 4.50% by solely using software decoder profiling.

Non-Rectangular Signal Transformation for Residuals Generated from Wedge Mode Using Rectangular Transforms

Wedge mode is adopted in AV1 for the compound prediction mode where the signal can be predicted by combining two inter-prediction signals or one inter- and one intra-prediction signal using non-rectangular smooth masks. This generates two residual signals from different sources, which are transformed together as a rectangular block. We propose a transformation method where the residuals from different sources are separated and transformed individually using a sparse coding technique with partitioned 2D DCT bases of closest rectangular size. The coefficients are encoded with a scaling factor that mimics the behavior of extending the signal to a rectangular shape. The benefit of such a technique is that, at the decoder, the regular inverse transformation is applied to the coefficients, and the extended signal is ignored. Hence, minimum changes are required at the decoder.

Introducing Speed-Adaptive Preset Switching for Encoder Complexity Control in SVT-AV1

The growth in video streaming traffic during the last decade requires ongoing improvements in video compression technologies, especially efficient encoders. Recent advances in this field, however, come at the cost of increasingly higher computational demands which motivates the deployment of complexity control in encoding to achieve a reasonable trade-off between compression performance and processing time.

We introduce a complexity control mechanism in SVT-AV1 by using speed-adaptive preset switching (SAPS) to comply with the remaining time budget. Additionally, an analytical model is presented that estimates the encoding time in dependency of preset, quantization parameter, and video metadata. Our work enables encoding with a user-defined encoding time constraint within the complete preset range without introducing any additional latencies.

Film Grain Synthesis – a Key Feature to Preserve Artistic Intent

Originally a side effect of the technology, film grain is now perceived as appealing and essential to the look and feel of motion pictures. Abundant in movies and series, its aesthetics is part of the creative intent and needs to be preserved while encoding. However, due to its random and unpredictable nature, it is particularly challenging to compress. In this talk, it is shown how to preserve film grain during video compression by leveraging both perceptually driven pre-processing and core video compression techniques. AV1 specification includes a film grain synthesis approach. It is shown that, when estimating correctly film grain parameters, film grain can be preserved visually while reducing the bitrate by 50% to 90 %. As for codecs where no film grain synthesis is specified, it is demonstrated that it is nevertheless possible to preserve film grain aspect by slightly modifying grain repartition and by tuning the core encoder accordingly. AV1 is the first to standardize film grain synthesis as a mandatory tools, while other standardization are revamping the topic.

AV1 in the Wild and Lessons for AV2

Visionular was founded in 2018 to provide next-generation video encoding for AV1, HEVC and H264. In this talk we will present our experience of deploying AV1 for VoD, live streaming and RTC. We will talk about AV1 uptake and how AV1 compares in the “green”, computationally aware video economy. What features have driven AV1 adoption to date and are most valued by customers? Looking forward to AV2, what features will help drive success in the future? We will discuss how AV1 features and tools could be improved and the key challenges for the next generation in the light of practical customer experience.

The Development of AV1 Encoder for Real-Time Communication

The AV1 real-time encoder has recently been made substantially faster and more efficient in compression at the same time. As of the latest libaom release, it is capable of running at the same speed or faster than the VP9 RTC encoder in production settings, while producing significantly better compression efficiency and visual quality. The AV1 RTC encoder is being deployed in large scale services such as Google Meet, Chrome Remote Desktop, where it receives highly positive feedback. In this talk, we will cover the design principles and various practical considerations behind its development.

Seeing Past the Accuracy-Efficiency Tradeoff for Video Quality Assessment

State-of-the-art video quality models such as SSIM and VMAF have seen wide adoption over the years, particularly in the context of large-scale video delivery, i.e., streaming and video codec development. VMAF is a computationally intensive quality model that provides accurate estimates of quality, while PSNR and SSIM are efficient, yet less accurate, alternatives. In this talk, we will present the novel FUNQUE suite of models that break past this accuracy-efficiency tradeoff by designing fusion-based quality models that are both more efficient and more accurate. We demonstrate the efficacy of the FUNQUE framework by designing and evaluating quality models for both HD video and 4K HDR video-on-demand applications.

Lessons from a Subjective Study Using AV1 Optimisation for HDR

High Dynamic Range (HDR) devices and content have gained traction in the consumer space as it delivers an enhanced quality of experience. At the same time, the complexity of codecs is growing. This has driven the development of tools for content-adaptive optimisation that achieve optimal rate-distortion performance for HDR video at 4K resolution. While improvements of just a few percentage points in BDRATE (1-5%) are significant for the streaming media industry, the impact on subjective quality has been less studied especially for HDR/AV1.

In this talk, we highlight our key findings and takeaways after conducting a subjective quality assessment of a per-clip optimisation strategy with help of tuning Lambda in the AV1 encoder[1]. The study was conducted with Double stimuli protocol (DSQCS) with 42 subjects. We correlate these subjective scores with existing objective metrics used in standards development and show that some perceptual metrics correlate surprisingly well even though they are not tuned for HDR. We also highlight the complexity of setting up a controlled subjective testing environment for 4K HDR playback with AV1 and the things to consider for a subjective study with AV1 and HDR[2]. We find that the DSQCS protocol is insufficient to categorically compare the methods but the data allows us to make recommendations about the use of experts vs non-experts in HDR studies for improving next-generation AOM codecs.